Scatterbrained Saturday: The New ChatGPT

GPT-5, vibe-coding, and why I feel (slightly) more optimistic that AI won't take our jobs anytime soon

Two days ago, OpenAI released GPT-5: its newest artificial intelligence model. Announcing its release, they declared it was “state-of-the-art across coding, math, writing, health, visual perception, and more.” But in practice, it’s less of a massive upgrade and more of an incremental improvement over its predecessors—one that may reset near-term expectations for the industry.

A More Natural Speaking Style

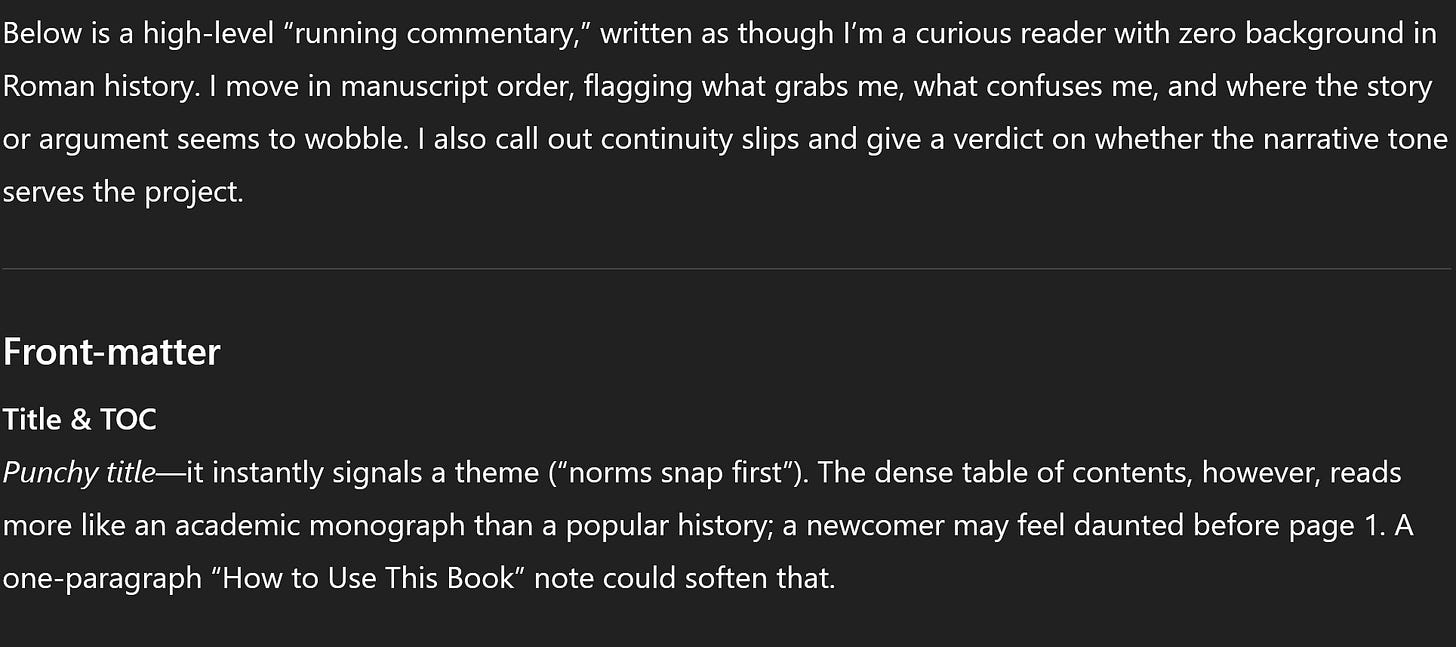

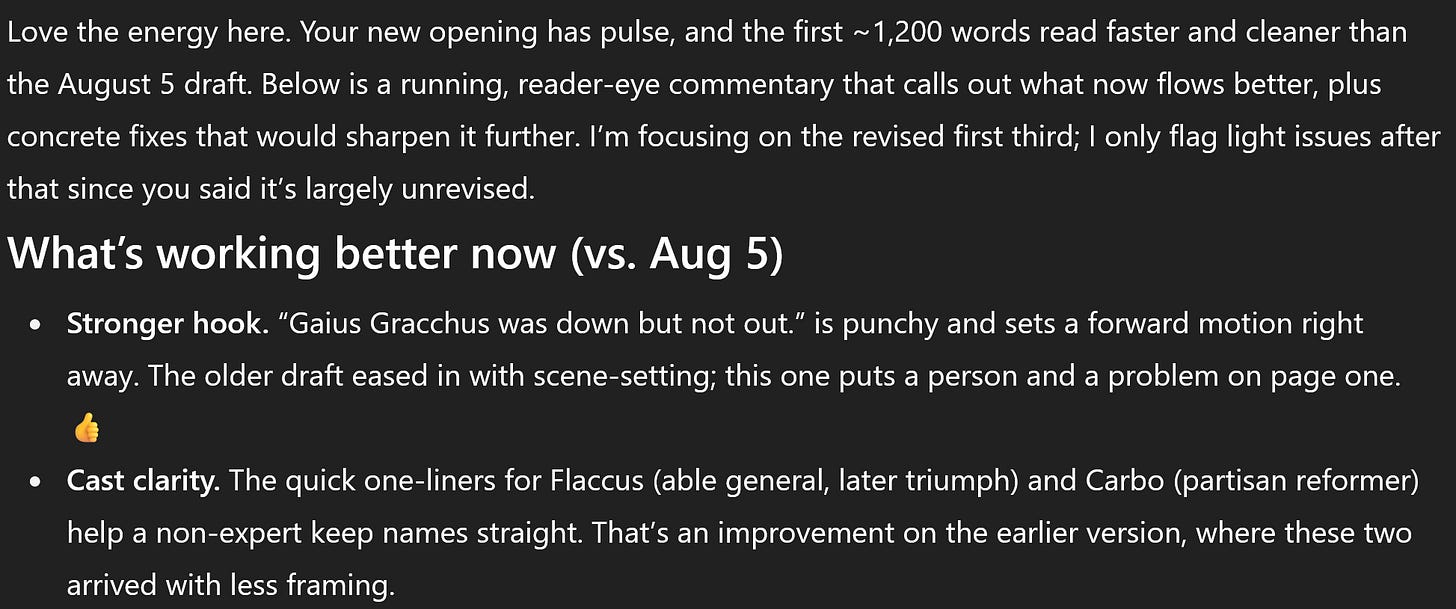

Here’s a comparison of two conversations I had with ChatGPT. Both have a near-identical premise: I’m revising The Unwritten Rules Break First, and I want a running commentary on what flows and what doesn’t. See for yourself how each model communicates:

GPT-5 is sharper and more concise than o3. You can still tell that GPT-5’s response is AI-generated (adjectives such as ‘reader-eye’ are a dead giveaway), but it’s far harder to spot than before.

This has unnerving implications. I worry that GPT-5 makes it more difficult to detect AI-generated text in the real world. It’s already tough for most people, but what happens if it becomes impossible even for experts? Verification becomes nearly impossible for organizations lagging behind the latest technology, especially schools, businesses, and online platforms. That said, I can’t tell for sure whether these changes are specific to my own GPT-5 conversations or apply to everyone. So there’s a small chance this is a one-off occurrence and not some earth-shattering revelation.

Increased Coding Capabilities

I’ve experimented with GPT-5 on this front the most, and I can confidently say that its coding abilities are far better than those of previous models. I’ve tried vibe-coding with ChatGPT in the past (letting AI generate programs for me), but I always felt it was clearly inferior to its competitor, Claude. Now it’s different.

This is Home Library. It’s a completely AI-generated application that tracks my personal book collection. And it only took GPT-5 about 36 hours of back-and-forth to make it work. I can import files to fill out my collection or search for books to add. I can click on each title to see its authorship and release date. There’s even a statistics panel to track how many books I own or how many pages I’ve read. This is a fully fleshed-out, AI-generated application that works.

However, it took considerable debugging for the code to function properly. GPT-5 is surprisingly strong at creating finished products from scratch, but it broke down once I asked for additional features. In this case, I tried adding file-sharing and automatic book information lookup, and it took most of yesterday to resolve the accompanying bugs.

Given how much I had to step in to get Home Library across the finish line, I believe we’re nowhere near the point where these models can code everything on their own. I suspect human oversight will be needed to manage these tasks for the foreseeable future, as GPT-5’s coding capabilities aren’t fully developed yet.

Unresolved Issues

GPT-5 is better than before, but it’s limited by the surrounding environment. For example, Canvas (OpenAI’s code workspace) often blocked edits to the Home Library project. This forced me to abandon the platform in favor of a brute-force approach where GPT-5 provided me with code and directed me to paste updates into my project files. Not a major issue, but frustrating nevertheless.

Additionally, GPT-5 taxed my computer, even though it’s brand new and quite powerful (an Intel Core 7 CPU, RTX 4050 GPU, and 32 GB RAM). I had to generate most of Home Library on my phone so that my computer wouldn’t freeze. This could be an issue with the project’s size (over 1,000 lines), but I don’t recall these problems occurring with earlier OpenAI models.

We’ve Hit A Wall

Thinking broadly, perhaps the most crucial takeaway is this: OpenAI likely spent years and millions of dollars on this model, and it didn’t result in the massive productivity increases of yesteryear. Does this indicate that the AI boom is slowing down? Maybe.

Recall that many of the promises around AI are the same ones that circulated during the Internet boom of the late 1990s. That boom ended in a stock market crash, as investors realized the products they were getting didn’t live up to the hype. The Internet revived itself in the following decades, but many in the industry switched from the idealism of the past to the realism of today.

I wouldn’t be surprised if the same thing happens here. Just like in the late 1990s, we’re seeing massive investments in thousands of AI companies from public and private organizations alike. If Big AI fails to deliver strong upgrades when existing models hit their limits, investors will wake up from their slumber and pull their backing.

As an AI-realist, I found reasons to be both optimistic and pessimistic about the field after using GPT-5. I think this is as good as AI gets for now: models with strong reasoning and coding skills that can’t think for themselves. Barring another sea change (for example, increased usage of AI ‘agents’ that control computer programs), I think this is where we’ll be for a while. Have a great weekend!

Great article Mike! Love the Home Library application!